It is a truth universally acknowledged that when it comes to artificial intelligence, you must follow one rule: Use AI, but don’t make it look like you used AI.

First, delete your em dashes. Next, replace the word ‘delve’. Avoid this sentence structure: ‘Not only X but also Y’. But most importantly, avoid AI in internal communications where authenticity underpins trust.

Trust. It’s the currency of public relations; the foundation of multimillion-dollar relationships built on sharing sensitive information with the right people at the right time. However, as we incorporate AI into more of our work, there are bound to be risks. How could AI-generated content harm real-world institutions? And where does human judgment remain indispensable?

At HAVAS Red, one of my primary responsibilities is environmental scanning: monitoring and filtering the news that shapes our clients’ reputations and operating environments. My favourite task is writing The Daily Tea, a tailored newsletter covering how AI is reshaping Australia’s corporate, banking, and healthcare sectors. Nothing excites me more than embodying Lady Whistledown and serving piping hot tech gossip.

But as headlines warned of widespread automation and job displacement, I began to question how much ‘better’ AI really was than humans. So, I challenged AI: Can you automate me? Can you write a better Daily Tea?

It was the worst edition ever. Outdated stories, broken links, tepid copy, fake academic references – the same culprits that forced Deloitte to refund the government for a botched $440,000 report containing AI errors. Or that led to a $202,000 NDIS claim being rejected, denying vulnerable people the support they needed. Or that got a principal lawyer disbarred.

These cases are just the latest in a concerning trend of practitioners submitting AI-generated work, and they warn us of the perils of unverified AI outputs.

This matters to HAVAS, as indeed it does to any PR agency worth its salt. If we delivered content based on false information, clients would stop trusting us. If journalists detected AI-generated pitches lacking originality or accuracy, they’d disengage; after all, they could just generate one themselves. As generative AI has surged into mainstream use, we’ve seen the risks: without human oversight, credibility is diminished.

As communication professionals, we always look beyond words. At the heart of our labour lies our ability to create, mediate and deepen human relationships. What we produce is more than just an inert set of texts – it’s the product of human interactions (Carah & Louw, 2015).

It is this professional responsibility that makes ‘Agency Intelligence’ more vital than ever.

HAVAS’s ‘Agency Intelligence’ places human creativity and strategic thinking at the forefront of public relations and communications. While AI offers valuable time-saving tools, it’s the critical human intellect that drives meaningful brand storytelling and innovation.

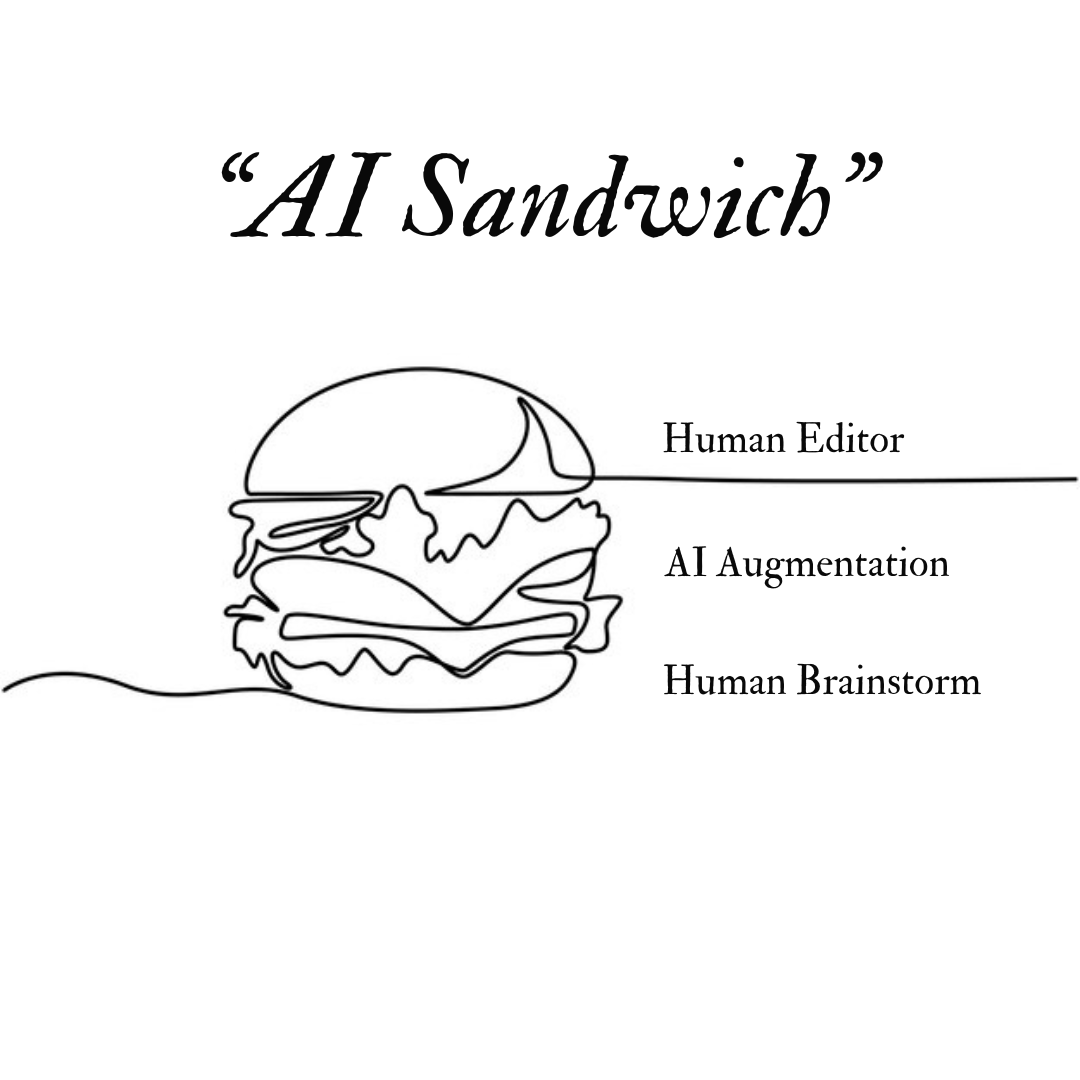

In practice, ‘Agency Intelligence’ can be as simple as the “AI Sandwich” method. Here’s how it works: human thinking serves as the anchor, AI expands our information database and helps shape content, and finally, human intervention oversees the final content.

Research reinforces this “sandwich” method. A study from MIT revealed that writers who used AI only at the end – after conducting their own research and developing their own ideas – outperformed those who relied on AI from start to finish. In Artificial Intelligence for Strategic Communication, Sutherland (2025) cautions that because AI often produces verbose, biased or fabricated information, human editors play a crucial role in ensuring that the final message is accurate, culturally sensitive, relevant and emotionally impactful.

In an industry where trust is earned in drops and lost in buckets, HAVAS reminds us not only how to use AI ethically, but why. If we do not critically evaluate AI-generated content or its potential impacts, the consequences are costly.

Because while AI can power communication, only humans can build trust.

References

Carah, N., & Louw, P. E. (2015). Media & society: Production, content & participation. SAGE.

Sutherland, K. E. (2025). Artificial Intelligence for Strategic Communication (1st ed., pp. 304-308). Springer Nature Singapore. https://doi.org/10.1007/978-981-96-2575-8

Justine Kim is a post-graduate student studying a Master of Digital Communications and Culture at the University of Sydney. She is currently completing her capstone course with the Corporate team of HAVAS Red Australia.
